This article describes some of the techniques and approaches I used to reverse engineer the out-of-the-box File Picker to create my own.

What do you get when you compile every migration best-practices article you can find into a single article?

Visual Studio Code has a cool feature that allows you to edit with multiple cursors at once. Find out how to use this feature.

People don’t always find content by searching. For example, if you’ve ever tried to find something but didn’t know what keyword to use in your search query, you’ll appreciate how challenging it can be. Find out the 3 ways users find content and how your Information Architecture can help people find what they need, even when they don’t know what they’re looking for.

Autism isn’t necessarily a life sentence that means you’ll live in your parents’ basement. I live with autism, and I’ve managed to turn my quirks into perks and build a career as an IT consultant.

Today’s guest blogger, Hugo’s evil twin, will give you tips to make a migration project take as long as possible so that you can make as much money as possible while doing nothing.

In my previous post, I recommended adding the Fira Code font to your Cmder command prompt. As Sam Culver pointed out, it makes a great font for Visual Studio Code as well. Find out how to configure VS Code to use Fira Code as your default font.

I asked the SharePoint Developer Community what development tools they use on their workstations to develop SPFx solutions. They came through!

SharePoint Online’s maximum file URL path is 400 characters. Find out why you should plan your file migration to SharePoint so that the 400-character limit doesn’t cause issues.
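
Before migrating, you can check which files would cross the limit. The sketch below is hypothetical (`findPathsOverLimit` and `toServerRelativePath` are illustrative names): it assumes you measure the server-relative destination path, such as `/sites/hr/Shared Documents/...`, plus each file’s path relative to your source folder. Check Microsoft’s current documentation for exactly which part of the URL counts toward the 400 characters.

```typescript
// Limit described above for SharePoint Online file paths.
const MAX_SHAREPOINT_PATH_LENGTH = 400;

// Hypothetical helper: join a server-relative library path
// (e.g. "/sites/hr/Shared Documents") with a file's path relative to the
// migration source folder, normalizing Windows backslashes to "/".
function toServerRelativePath(libraryPath: string, relativeFilePath: string): string {
  const base = libraryPath.replace(/\/+$/, "");
  const rest = relativeFilePath.replace(/\\/g, "/").replace(/^\/+/, "");
  return `${base}/${rest}`;
}

// Return the files whose destination path would exceed the limit, with the
// computed length, so they can be renamed or moved before the migration.
function findPathsOverLimit(
  libraryPath: string,
  relativeFilePaths: string[],
  limit: number = MAX_SHAREPOINT_PATH_LENGTH
): { path: string; length: number }[] {
  return relativeFilePaths
    .map(p => toServerRelativePath(libraryPath, p))
    .map(path => ({ path, length: path.length }))
    .filter(entry => entry.length > limit);
}
```

For example, `findPathsOverLimit("/sites/hr/Shared Documents", ["Policies\\2020\\Handbook.docx"])` returns an empty array, since that path is well under 400 characters.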

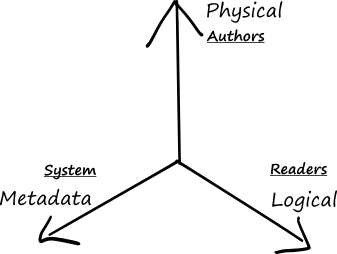

The physical dimension is for authors; the logical dimension is for readers. Find out how the metadata dimension helps your users accomplish their goals.

When building your SharePoint Information Architecture, the logical IA can be very different from the physical IA. Find out how to build a logical IA that will make your users think SharePoint was built specifically for them.

The SharePoint Development Community consists of Microsoft employees and non-Microsoft people who work together (often on their own time) to help others. Please be kind to them; they are people too! #SPFx #SharePoint #Office365 #SPDevWeekly

A good SharePoint Information Architecture isn’t built like a giant file share. You should use 3 dimensions. Find out what those dimensions are, and how to go about starting an awesome IA your users will love.

“One size fits all” doesn’t work for Information Architecture. Especially not in #SharePoint. You need to create an IA that suits all your users while being able to adapt to your changing business needs. Find out why your first instinct for an Information Architecture may not be the right one.

People don’t read online; they scan. Your SharePoint news posts are important, but they don’t stand a chance when competing for attention. Find out how the average length of Fortune 1000 company names affects how people will read your headlines.

People often blame SharePoint because they can’t find content, when they really should blame a poorly designed Information Architecture. In this first article of a series on IA, learn about Data, Information, and Knowledge, and how understanding the difference between them leads to better content structure.

What if you could set your #SPFx web part’s default properties based on a user’s name, language, role, or preferences when they add your web part to a page? How about changing the default based on the SharePoint environment, current language, or current date and time?
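
Here is a minimal sketch of the idea, assuming a hypothetical web part with `title` and `language` properties. The defaults are computed from the user’s name, the current language, and the time of day; in a real web part you might gather those values from `this.context.pageContext` in `onInit()`, but that wiring is an assumption, not necessarily how the article does it. The context is passed in explicitly so the logic is easy to test.

```typescript
// Hypothetical property shape for a "greeting" web part (illustrative only).
interface IGreetingWebPartProps {
  title?: string;
  language?: string;
}

// The context used to pick defaults, passed in so the logic is testable.
interface IDefaultsContext {
  userDisplayName: string;
  currentLanguage: string; // e.g. "en-US" or "fr-FR"
  now: Date;
}

// Compute defaults from the user's name, language, and time of day.
function computeDefaults(ctx: IDefaultsContext): Required<IGreetingWebPartProps> {
  const isMorning = ctx.now.getHours() < 12;
  const isFrench = ctx.currentLanguage.toLowerCase().slice(0, 2) === "fr";
  const greeting = isFrench
    ? (isMorning ? "Bonjour" : "Bon après-midi")
    : (isMorning ? "Good morning" : "Good afternoon");
  return { title: `${greeting}, ${ctx.userDisplayName}`, language: ctx.currentLanguage };
}

// Only fill in properties that haven't been set, so the user's own values win.
function applyDefaults(props: IGreetingWebPartProps, ctx: IDefaultsContext): IGreetingWebPartProps {
  const defaults = computeDefaults(ctx);
  return {
    title: props.title !== undefined ? props.title : defaults.title,
    language: props.language !== undefined ? props.language : defaults.language,
  };
}
```

Keeping the "only if unset" check separate from the computation means a page author who has already edited the title never sees it overwritten.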

If you’ve tried calling external APIs from within your SPFx component, you may have run into an issue due to CORS. This article explains what CORS is, and how to prevent the issue.
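
CORS only comes into play when the browser considers a request cross-origin, meaning the scheme, host, or port differs from the page’s. The sketch below shows that comparison using the standard `URL` class; `isCrossOrigin` is a hypothetical name. It doesn’t fix anything by itself: on the browser side there’s nothing to configure, so the external API (or a proxy in front of it) has to respond with headers such as `Access-Control-Allow-Origin`.

```typescript
// Sketch of the check a browser makes before applying CORS: a request is
// cross-origin when the scheme, host, or port differs from the page's.
function isCrossOrigin(pageUrl: string, requestUrl: string): boolean {
  const page = new URL(pageUrl);
  // Relative URLs (e.g. "/_api/web") resolve against the page, as fetch() does.
  const target = new URL(requestUrl, pageUrl);
  // URL.origin already normalizes default ports (":443" for https).
  return page.origin !== target.origin;
}
```

A call from a SharePoint page to its own `/_api/...` endpoints is same-origin, so CORS never applies; a call to a different host is where the browser starts enforcing it.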

Find out about a cool helper function that makes it easy to combine CSS classes dynamically, and it’s already built into your SPFx solution!
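
The built-in helper isn’t named in this summary, so the following is only an illustration of the general idea: a hypothetical `combineClasses()` that takes plain class names or `{ className: condition }` maps and joins the ones that apply. It is not the SPFx helper itself.

```typescript
// Hypothetical helper (not the built-in one): accepts class names and
// { className: condition } maps, and joins the ones that apply.
type ClassNameArg = string | undefined | null | false | { [className: string]: boolean };

function combineClasses(...args: ClassNameArg[]): string {
  const classes: string[] = [];
  for (const arg of args) {
    if (!arg) {
      continue; // skip undefined, null, false and ""
    }
    if (typeof arg === "string") {
      classes.push(arg);
    } else {
      // Include each key whose condition is true.
      for (const name of Object.keys(arg)) {
        if (arg[name]) {
          classes.push(name);
        }
      }
    }
  }
  return classes.join(" ");
}
```

In a React component, you might write something like `className={combineClasses(styles.card, { [styles.selected]: isSelected })}`, where `styles` and `isSelected` are hypothetical.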

Today, I was moving my files to my new Surface Studio 2 (which is an awesome development machine!). All my personal files are synced to OneDrive, except for my Visual Studio and GitHub project files, which are stored by default in `c:\users\[myuseraccount]\source\repos`. Syncing your personal files to OneDrive makes it really easy to work on multiple devices and ensures that you have a backup in case your workstation is stolen, lost, self-destroyed, or abducted by aliens. Making sure that your project files are also synced ensures that all those prototypes, proofs of concept, and other code snippets that you never bothered adding to source control are also safe. This article describes the steps to move your default project location to a folder that can be stored in OneDrive. Let’s make one thing clear: syncing your project files to OneDrive does not replace using source control; if you have…

A while ago, I wrote an article describing how you can inject a custom CSS stylesheet on SharePoint modern pages using an SPFx application extension. The code sample is now part of the SharePoint SP-Dev-Fx-Extensions repository on GitHub.

Since I published the article, I’ve been getting tons of emails asking all sorts of questions about the solution.

With the release of SPFx 1.6, I took the opportunity to upgrade the solution to the latest and greatest version of the toolset. You can find the latest code on GitHub, or download the latest SharePoint package.

In this post, I’ll (hopefully) answer some questions about how to use it.

A week ago, Microsoft officially released the SharePoint Framework Package v1.5, introducing awesome new features like the Developer Preview of Dynamic Data and the ability to create solutions with beta features by adding `--plusbeta` to the Yeoman command, among other features. While it isn’t necessary to update your existing SPFx solutions, you may need to (for example, if an existing solution needs a feature that is only available in SPFx 1.5). Unfortunately, upgrading a solution between versions of SPFx is often painful. Thankfully, there is now an easy way to do it! This article explains a (mostly) pain-free way to upgrade your SPFx solution. Waldek explains this process in detail; this is a summary of how to do it. Office 365 CLI is a cross-platform command-line interface (at least, that’s what I think CLI means… I hate acronyms) that…

In Part 1 of this article, I walked through the various components that we’ll need to build to create a responsive calendar feed web part that mimics the out-of-the-box SharePoint events web part. In this article, we’ll create a web part solution, add a mock service to return test events, and display a simple list of events. If you haven’t done so yet, set up your SharePoint Framework development environment following Microsoft’s awesome instructions. We’ll create a solution called react-calendar-feed-1. In future articles, we’ll use what we built in this article as the foundation for react-calendar-feed-2, and so on until we’re done with the solution, which we’ll call react-calendar-feed. Of course, you can skip all the steps and get the code for the final solution, if you’d like. When you’re ready to create the solution, use…
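
A mock service lets you build the display before any real event source exists. The following is a minimal sketch under assumed names (`ICalendarEvent`, `ICalendarService`, `MockCalendarService` are hypothetical, not the article’s code). It generates test events relative to "now", so the list always shows upcoming items, and it exposes the same promise-based method a real service would.

```typescript
// Hypothetical event shape and service contract for the calendar web part.
interface ICalendarEvent {
  title: string;
  start: Date;
  end: Date;
  location?: string;
}

interface ICalendarService {
  getEvents(): Promise<ICalendarEvent[]>;
}

class MockCalendarService implements ICalendarService {
  // "now" is injectable so tests get predictable dates.
  constructor(private readonly now: Date = new Date()) {}

  // Build a few test events relative to "now", sorted by start time.
  public buildEvents(): ICalendarEvent[] {
    const hourMs = 60 * 60 * 1000;
    const dayMs = 24 * hourMs;
    const at = (offsetDays: number, offsetHours: number): Date =>
      new Date(this.now.getTime() + offsetDays * dayMs + offsetHours * hourMs);
    return [
      { title: "Team stand-up", start: at(1, 0), end: at(1, 0.5), location: "Room 101" },
      { title: "Sprint review", start: at(3, 2), end: at(3, 3) },
      { title: "Release party", start: at(7, 5), end: at(7, 8), location: "Cafeteria" },
    ];
  }

  // Same asynchronous shape a real provider would expose.
  public getEvents(): Promise<ICalendarEvent[]> {
    return Promise.resolve(this.buildEvents());
  }
}
```

Because the web part only depends on `ICalendarService`, swapping the mock for a real service later shouldn’t require changes to the rendering code.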

Last week, I attended the SharePoint 2018 Conference in Las Vegas. There were a lot of cool announcements and demos. The SharePoint team rocks! One cool thing I noticed, which had nothing to do with SharePoint, was that a lot of presenters who showed code had a really cool command prompt that showed the node module they were in and their Git branch status in a pretty “boat chart”. I had seen this many times before, but never realized how much easier it made it to get a sense of what’s going on until I was watching someone else code on a big screen. Of course, I set out to find and configure this awesome command line on my workstation. This article will show you how you too can install and configure this command-line interface. During Vesa’s awesome session, I paid close attention to the title…

One of the premises of SPFx is that third-party developers have the same set of tools that the SharePoint team has. So, if you like the look of an out-of-the-box web part, you can, in theory, reproduce the same look and feel yourself. A friend of mine needed to display a list of upcoming events, but the events come from a WordPress site that uses the WP Fullcalendar widget. They also really liked the look of events in SharePoint. So, I thought: why not try re-creating the out-of-the-box SharePoint events web part, but instead of reading events from a SharePoint list (or group calendar), have it read from WordPress? Since I was taking on the challenge, I decided to try adding a few extra features: read events from multiple event providers, including RSS, iCal, and WordPress; support additional event providers without having to redesign the entire…
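
Supporting new event providers without redesigning the web part usually comes down to coding against one interface and looking up implementations by key. The sketch below shows that pattern; every name in it (`IEventProvider`, `EventProviderRegistry`, and so on) is hypothetical and not taken from the article’s solution.

```typescript
// Hypothetical contracts: every event source implements IEventProvider,
// so the web part only ever talks to this interface.
interface IProviderEvent {
  title: string;
  start: Date;
}

interface IEventProvider {
  readonly name: string;
  getEvents(feedUrl: string): Promise<IProviderEvent[]>;
}

type EventProviderFactory = () => IEventProvider;

// Registry mapping a provider key (e.g. "rss", "ical", "wordpress") to a
// factory. Adding a new source means registering one more factory.
class EventProviderRegistry {
  private readonly factories: { [key: string]: EventProviderFactory } = {};

  public register(key: string, factory: EventProviderFactory): void {
    this.factories[key.toLowerCase()] = factory;
  }

  public create(key: string): IEventProvider {
    const factory = this.factories[key.toLowerCase()];
    if (!factory) {
      throw new Error(`No event provider registered for "${key}"`);
    }
    return factory();
  }

  public keys(): string[] {
    return Object.keys(this.factories);
  }
}
```

Usage would look like `registry.register("rss", () => new RssEventProvider())`, where `RssEventProvider` is a hypothetical class implementing `IEventProvider`. The `keys()` list could also populate a provider drop-down in the property pane.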

Why would you want to inject CSS? Since Microsoft introduced Modern Pages to Office 365 and SharePoint, it is really easy to create beautiful sites and pages without requiring any design experience. If you need to customize the look and feel of modern pages, you can use custom tenant branding, custom site designs, and modern site themes without incurring the wrath of the SharePoint gods. If you want to go even further, you can use SharePoint Framework Extensions and page placeholders to customize well-known areas of modern pages. Right now, those well-known locations are limited to the top and bottom of the page, but I suspect that in a few weeks, we’ll find out that there are more placeholder locations coming. But what happens when your company has a very strict branding guideline that requires very specific changes to every page? When your customization needs go beyond what’s supported in…

In part 1 of this article, I introduced the concept for an SPFx extension that adds a header to every page, showing the classification information for a site. In part 2, we created an SPFx extension that adds a header that displays a static message with the security classification of a site. In part 3, we learned more about property bags and a few ways to set the sc_BusinessImpact property (a property we made up) of our test sites to LBI, MBI, and HBI. In part 4, we wrote the extension that reads from a site’s property bag and displays the classification in the header. In this part, we will clean up a few things, then package and deploy the extension. The extension we wrote in parts 1-4 of this article works, but it isn’t really production-ready. First, we’ll want to change the code…

In part 1 of this article, I introduced the concept for an SPFx extension that adds a header to every page, showing the classification information for a site. In part 2, we created an SPFx extension that adds a header that displays a static message with the security classification of a site. In part 3, we learned more about property bags and a few ways to set the sc_BusinessImpact property (a property we made up) of our test sites to LBI, MBI, and HBI. In this part, we will finally add code to our extension that reads the property bag of the current site and displays the appropriate site classification label. You can get the property bag of a site using a simple REST call to `https://yourtenant.sharepoint.com/sites/yoursite/_api/web/allProperties`, but it is even easier to use the SP PnP JS library to make…
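
The article goes on to use SP PnP JS, but the plain REST route is worth a quick sketch. The helpers below are hypothetical: one builds the `allProperties` URL and the other reads `sc_BusinessImpact` from the parsed JSON. One assumption to flag: REST responses for `allProperties` typically escape underscores in property names as `_x005f_`, so the value may come back as `sc_x005f_BusinessImpact`. The helper checks both spellings.

```typescript
type Classification = "LBI" | "MBI" | "HBI";
const CLASSIFICATIONS: Classification[] = ["LBI", "MBI", "HBI"];

// Build the allProperties endpoint for a site, avoiding a double slash.
function allPropertiesUrl(siteUrl: string): string {
  return `${siteUrl.replace(/\/+$/, "")}/_api/web/allProperties`;
}

// Type guard: only accept the three values our sites use.
function isClassification(value: unknown): value is Classification {
  return typeof value === "string" && CLASSIFICATIONS.indexOf(value as Classification) !== -1;
}

// Read the made-up sc_BusinessImpact property from the parsed JSON response.
// Assumption: the name may be escaped as "sc_x005f_BusinessImpact".
function readClassification(allProperties: { [name: string]: unknown }): Classification | undefined {
  const raw = "sc_BusinessImpact" in allProperties
    ? allProperties["sc_BusinessImpact"]
    : allProperties["sc_x005f_BusinessImpact"];
  return isClassification(raw) ? raw : undefined;
}
```

In an extension, you could fetch the URL with `this.context.spHttpClient.get(url, SPHttpClient.configurations.v1)`, call `.json()` on the response, and pass the result to `readClassification()`. Returning `undefined` for unknown values lets the header fall back to a default label.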

In part 1 of this article, I introduced the concept for an SPFx extension that adds a header to every page, showing the classification information for a site. In part 2, we created an SPFx extension that adds a header that displays a static message with the security classification of a site. Yes, static. As in hard-coded. I try to write these articles for people who don’t have as much experience with developing SPFx extensions, so I included the step-by-step instructions. In this article, we’ll discuss how we use property bags to store the security classification. What are property bags anyway? Property bags is a term used when describing a serialized list of properties. It isn’t unique to SharePoint — I remember using them in the good old C days, but SharePoint has been using them for a long time. Remember this screen from SharePoint Designer? Property bags are a convenient…

In part 1 of this article, I introduced the concept for an SPFx extension that adds a header to every page, showing the classification information for a site. We’ll actually do the coding in this article! Using the command line, create a new project directory: `md classification-extension`. Change the current directory to your new project directory: `cd classification-extension`. Launch the Yeoman SharePoint Generator: `yo @microsoft/sharepoint`. When prompted for the solution name, accept the default classification-extension. For the baseline package, select SharePoint Online only (latest). When asked “Where do you want to place the files?”, accept the default, Use the current folder. When asked whether you want to allow the tenant admin to deploy the solution to all sites immediately, respond Yes (unless you really want to deploy it to every single site manually). When asked for the type of client-side component to create, select Extension. Select Application Customizer when…

As an independent consultant, I get to work with a lot of organizations in both the public and private sectors. Most deal with various levels of security classification. Governance is always a hot topic with SharePoint. Most understand the importance of governance; some shrug it off with a “we’ll deal with it when it becomes a problem”, which is never a good idea, as far as I’m concerned. But what if we could make applying governance in SharePoint a lot easier? So easy, in fact, that it would be more painful to deal with it when it becomes a problem. That’s what I hope to do with this series of blog articles: demonstrate easy ways to introduce some level of governance using new enabling technologies, like SPFx web parts, extensions, and site scripts. My goal is not to duplicate the work of Microsoft and others; I may…

An awesome part of SPFx is the ability to create SharePoint Framework Extensions. At the time of this writing, you can write three types of SPFx extensions. Application customizers add scripts to pages and access predefined (well-known) HTML elements. At the moment, there are only a few page placeholders (like headers and footers), but I’m sure the hard-working SPFx team will announce new ones soon enough. For example, you can add your own customized copyright and privacy notices at the bottom of every modern page. Field customizers change the way fields are rendered within a list. For example, you could render your own sparkline chart on every row in a list view. Command sets add commands to list view toolbars. For example, you could add a button to perform an action on a selected list item. This article doesn’t try to explain how to create…

As the World’s Laziest Developer, I don’t like to invent anything new if I can find something that already exists (and meets my needs). This article is a great example of that mentality. I’m really standing on the shoulders of giants, combining a few links and reusing someone else’s code (with credit, of course) to document my approach to versioning SPFx packages, in the hope that it helps someone else. CHANGELOG.md: a standard way to communicate changes that doesn’t suck. There are a few ways to communicate changes when working on a project: you can use your commit log diffs, GitHub Releases, your own log, or any other standard out there. The problem with commit log diffs is that, while comprehensive, they are an automated log of changes that includes every-single-change. Log diffs are great for documenting code changes, but if you…
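
One practical wrinkle when versioning SPFx packages: `package.json` uses a three-part semantic version, while the solution version in `package-solution.json` has four parts. A build step that copies one into the other needs a small mapping like the hypothetical `toSolutionVersion()` below. This is an illustrative sketch, not the code the article reuses.

```typescript
// Hypothetical helper: map a semver string from package.json ("1.2.3",
// optionally with pre-release/build metadata) to the four-part version
// used in package-solution.json ("1.2.3.0").
function toSolutionVersion(semver: string): string {
  // Drop any pre-release ("-beta.1") or build metadata ("+build.5").
  const core = semver.trim().split(/[-+]/)[0];
  const parts = core.split(".");
  const isNumeric = (p: string): boolean => /^\d+$/.test(p);
  if (parts.length !== 3 || !parts.every(isNumeric)) {
    throw new Error(`Expected a semver version like 1.2.3, got "${semver}"`);
  }
  // Append a fourth "revision" segment of 0.
  return `${parts.join(".")}.0`;
}
```

Keeping `package.json` as the single source of truth means the version you record in CHANGELOG.md and the version SharePoint displays can’t drift apart.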

This is an easy one, but I keep Googling it. When you create an SPFx web part, the default Property Pane automatically submits changes to the web part. There is no “Apply” button. But sometimes you don’t want changes to the property pane fields to apply automatically. All you have to do is add this method to your web part class (I like to place it just before `getPropertyPaneConfiguration`): `protected get disableReactivePropertyChanges(): boolean { return true; }` When you refresh the web part, your property pane will sport a fancy Apply button! Property changes in the property pane will only get applied when users hit Apply. That’s it!

Learn how you can use the new SharePoint Hub sites, with detailed step-by-step instructions.

How to solve the “this property cannot be set after writing has started.” error when calling OpenBinaryDirect

In my previous article, I discussed best practices for choosing high-resolution photos to use as user profile pictures in Office 365. You can upload user profile pictures using the Office 365 Admin Center. It may be obvious to everyone else, but I didn’t know this was possible until a very astute co-op student showed me this feature (after I spent an afternoon telling him the only way to do this was to use PowerShell). So, to save you the embarrassment, here is the web-based method: From the Office 365 Admin Center (https://portal.office.com), go to Admin, then Exchange. In the Exchange Admin Center, click on your profile picture and select Another User… from the drop-down menu that appears. The system will pop up a window listing users in your Office 365 subscription. Search for the user you wish to change and click OK. The system will pop up the user’s profile, indicating that you…

In Office 365, you can upload profile pictures for each user’s contact card. The contact card will appear in Outlook, SharePoint, Lync, Word, Excel, PowerPoint… well, in any Office product that displays contact cards 🙂 This isn’t a new concept in Office 2013, and the feature is also available in on-premises installations, but these articles focus on Office 365. There are two ways to achieve this: via the web-based graphical user interface, or using PowerShell. You’ll find all sorts of confusing information online regarding dimensions, file size, and format restrictions. I found that either of the two methods described in this article will work with almost any file size and dimensions. There are, however, some best practices. Choose square photos: use a square image as the source (i.e., same width and height); otherwise, the picture will be cropped when you upload it, and you may end up with portions of…
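
If you’d rather control the crop yourself, you can pre-crop each photo to a square before uploading. The hypothetical helper below computes the largest centered square for a given width and height. Centering is an assumption about what you’d want (faces are often near the middle), since the article doesn’t say how Office 365 crops non-square pictures.

```typescript
// Crop rectangle in pixels: top-left corner plus side length.
interface CropRect {
  x: number;
  y: number;
  size: number;
}

// Hypothetical helper: the largest square that fits in the photo,
// centered horizontally and vertically.
function centeredSquareCrop(width: number, height: number): CropRect {
  if (width <= 0 || height <= 0) {
    throw new Error("Width and height must be positive");
  }
  const size = Math.min(width, height);
  return {
    x: Math.floor((width - size) / 2),
    y: Math.floor((height - size) / 2),
    size,
  };
}
```

For a 1920 × 1080 landscape photo, this keeps the middle 1080 × 1080 square, starting 420 pixels from the left edge.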

Although you can use the web-based GUI to update profile pictures on Office 365, sometimes you need to upload many pictures at once. This is where PowerShell comes in handy. Here are the instructions to upload high-resolution user profile pictures to Office 365 using PowerShell commands: Launch the PowerShell console using Run as Administrator. In the PowerShell console, provide your Office 365 credentials by typing the following command and hitting Enter: `$Creds = Get-Credential`. You’ll be prompted to enter your credentials. Go ahead, I’ll wait. Create a PowerShell remote session to Office 365/Exchange by entering the following command and hitting Enter: `$RemoteSession = New-PSSession -ConfigurationName Microsoft.Exchange -ConnectionUri https://outlook.office365.com/powershell-liveid/?proxymethod=rps -Credential $Creds -Authentication Basic -AllowRedirection`. Initialize the remote session by entering: `Import-PSSession $RemoteSession`. Doing so will import all the required cmdlets to manage Exchange; this is why you don’t need to install any Exchange PowerShell modules or anything…